Intelligence that clarifies operations without losing control.

AI doesn’t create operational stability by itself.

It only works when signals, ownership, and thresholds are already defined — and when humans remain accountable for decisions.

We apply AI selectively to compress noise into signal, surface exceptions early, and make reporting narrative-ready, so leadership sees reality faster without adding risk.

Intelligence That Reduces Coordination — Not Another System to Manage

AI at Whizzystack is not a separate product and not a blanket automation layer.

It is selectively applied intelligence that surfaces signals, compresses complexity, and highlights exceptions, only where it improves operational clarity.

We introduce AI after the operating model is stable, with clear guardrails and human overrides.

How We Apply AI Without Creating Risk

We don’t “add AI” to workflows that are already unstable. We first stabilize the operating model — then apply intelligence only where it reduces coordination and improves visibility.

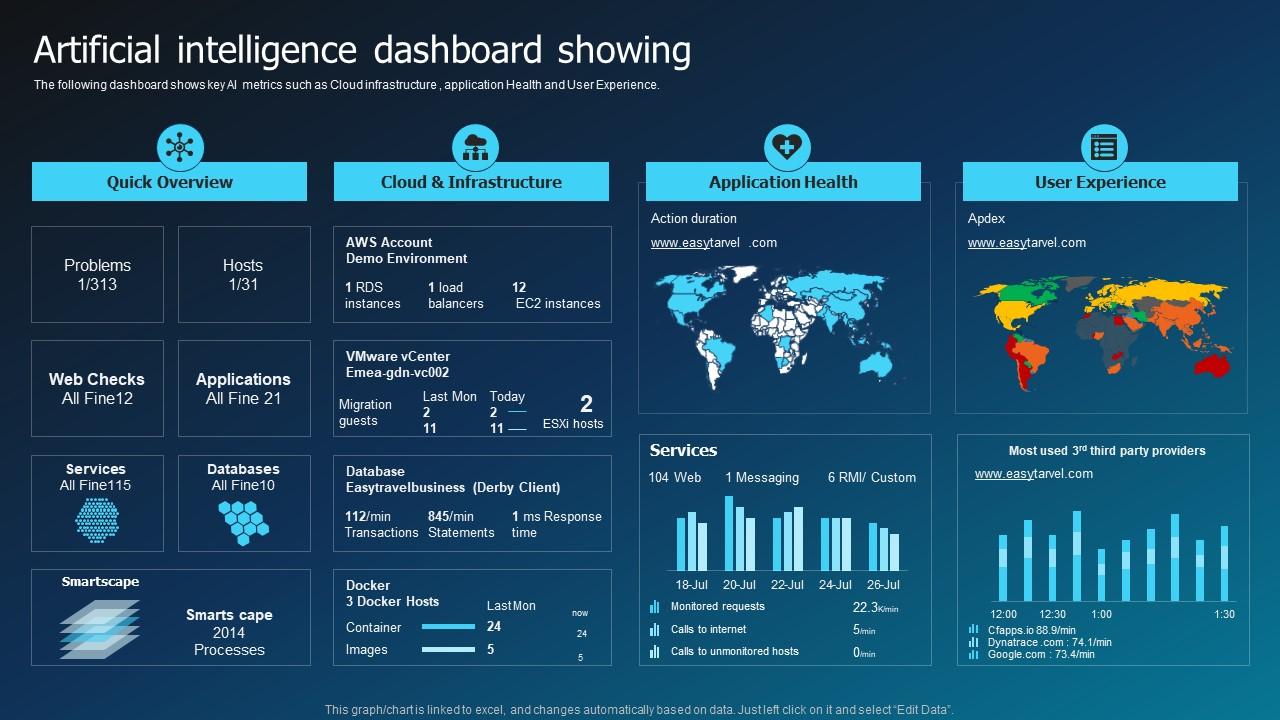

1) Compress noise into signal

AI summarizes long activity trails into clear updates — what changed, where it stands, and what needs attention.

2) Surface exceptions early

Detect SLA drift, stalled work, and repeating breakdown patterns — so teams can intervene before escalation.

3) Make reports narrative-ready

Generate reporting-pack summaries that explain the numbers: closures, exceptions, risks, and notable changes.

Before → After (What Changes With Intelligence)

These are operating shifts — not platform claims.

Before

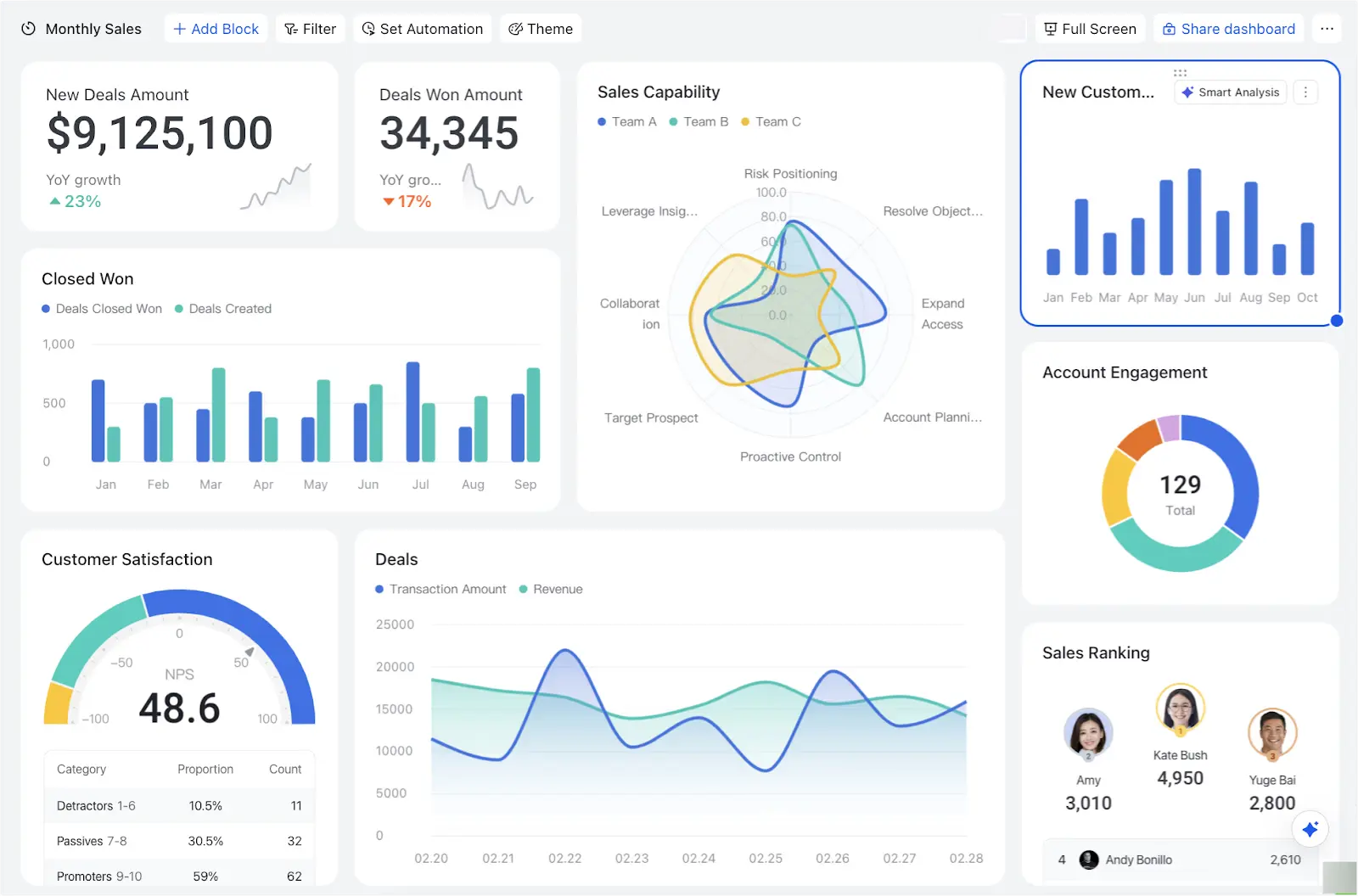

Data-heavy • interpretation-driven

- Dashboards exist, but understanding “what changed” requires meetings and manual synthesis.

- Exceptions are discovered late — after SLAs slip and escalations begin.

- Reports show numbers, but lack context — decisions depend on anecdotes.

After

Signal-led • exception-driven

- AI compresses complexity into clear updates: what changed, why it matters, and what’s next.

- Exceptions surface early with confidence levels and escalation guidance — humans decide.

- Reports gain narrative context — leadership decisions become faster and better grounded.

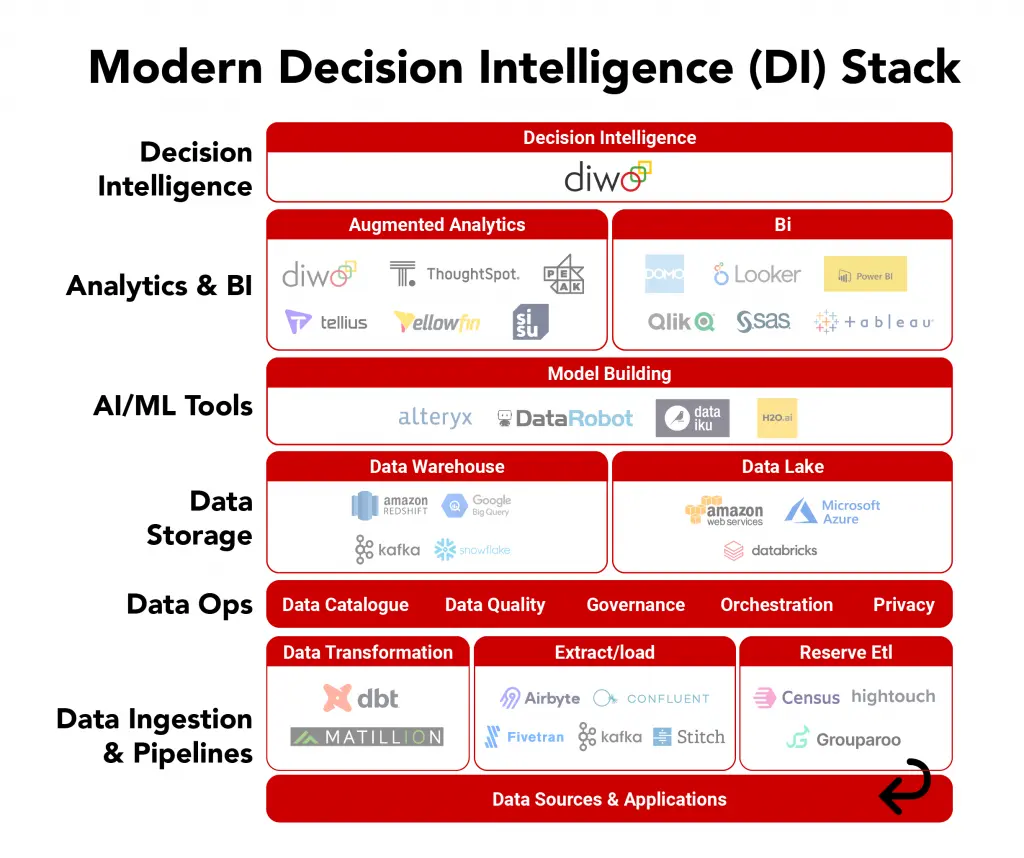

System Layers That Make Intelligence Useful

AI only works when the underlying operating model is stable. We define signals, thresholds, and guardrails during the Pilot — then activate intelligence selectively.

Signals We Typically Deliver

These are directional outcomes observed across operations contexts. Real measurement is defined during the Pilot.

The Intelligence Layer exists to clarify operations — not to replace them. We apply AI only where it reduces coordination, improves visibility, and stays under human control.

Leaders get clear summaries of what changed — without manual synthesis.

Exceptions surface before escalation — SLA drift and stalled work are flagged early.

Managers spend less time interpreting data and more time making decisions.

Where This Works Best

This is not “AI adoption.” It’s selective intelligence for teams that need operational clarity and control.

Works best for

- Ops environments with heavy coordination and long activity trails.

- Teams that already have stable signals and want better exception visibility.

- Leadership that needs narrative-ready reporting and faster decision cycles.

Not ideal if

- The operating model is unstable and inputs are inconsistent.

- The goal is “AI everywhere” without clear guardrails and accountability.

- Leaders want decisions automated without human review.

If You Want Intelligence Without Losing Control

The next step is a Pilot — to stabilize signals, define exceptions and thresholds, and validate where AI summaries and alerts reduce coordination before anything scales.

Start with a Pilot

We map your operating signals, define exceptions, establish guardrails, and validate where intelligence will help — before enabling any AI layer at scale.